Kubernetes is an open-source platform for managing containerized applications and services, known for the portability, scalability, and high availability it provides. However, Kubernetes is resource-intensive, which often translates to higher cloud costs. It also has a steep learning curve for deployment and day-to-day management, and cluster upgrades are a notoriously complex process. Together, these factors make Kubernetes adoption challenging, particularly for bare-metal users and for teams deploying services on edge devices such as the Raspberry Pi. In this blog, we’ll explore K3s, a lightweight and easy-to-manage Kubernetes distribution. We’ll look at how to deploy K3s, its use cases, and its advantages and disadvantages.

What is K3s?

Rancher introduced K3s as a lightweight Kubernetes distribution. K3s is a CNCF-certified Kubernetes distribution that ships as a single binary of less than 100 MB. K3s achieves its small footprint by removing optional, nice-to-have components of upstream Kubernetes, such as legacy, alpha, and non-default features and most in-tree cloud provider and storage plugins. K3s can run on as little as 512 MB of RAM and a single CPU core, although server nodes benefit from more. Other highlights include quick installation of production-grade clusters and automated cluster upgrades. Out of the box, K3s bundles containerd and runc, Flannel for CNI, CoreDNS, Metrics Server, Traefik for ingress, and a Helm controller, and most of these can be disabled or replaced, which gives users flexibility.

The lightweight nature and flexibility of K3s let users run production-grade Kubernetes clusters in resource-constrained environments, such as IoT devices like the Raspberry Pi. K3s is a great choice when you need a Kubernetes distribution that is lightweight, secure, simple to operate, and optimized for multiple architectures, including x86_64 and ARM.

K3s Architecture

A K3s cluster is made up of two kinds of nodes: server nodes, which run the control plane (and, by default, workloads as well), and agent nodes, which run workloads only. This architecture closely mirrors that of upstream Kubernetes.

A K3s server node runs the following components:

- Supervisor: Acts as the main process manager for K3s while monitoring and maintaining the health of other K3s components.

- API Server: It’s a control plane component that exposes the Kubernetes API and serves as the front-end interface for the Kubernetes control plane.

- Kube Proxy: Maintains network rules on each node so that traffic can reach pods, implementing part of the Kubernetes Service concept.

- Scheduler: Assigns each newly created pod to a node that has sufficient resources (CPU, memory, and storage) and satisfies any constraints set by the user.

- Controller Manager: Runs the controllers that handle routine cluster tasks, such as managing node lifecycle, replication, endpoints, and service accounts.

- Kubelet: The primary node agent; it runs on every node and ensures that the containers in each pod are running and healthy.

- Container Runtime (containerd): Manages the container lifecycle (creating, starting, stopping containers) and handles container image management and storage.

- Kine (database storage for K3s): Replaces etcd as the datastore by providing an etcd API translation layer, so cluster state can be stored in SQLite (the default on a single server), PostgreSQL, or MySQL.

- Flannel: Provides a layer 3 IPv4 network between multiple nodes in the cluster while managing the allocation of subnet leases to each host.

A K3s agent node runs only the node-level components (Kubelet, Kube Proxy, containerd, and Flannel), plus a Tunnel Proxy that establishes secure tunnels between the agent and the server, enabling secure communication across the cluster.
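On a single server, Kine stores cluster state in an embedded SQLite database. As a minimal sketch of the alternative, the snippet below writes a K3s config file that points Kine at an external PostgreSQL database instead. The connection string (user k3s_user, password change-me, host db.example.internal, database k3s) is a placeholder assumption. K3s reads its configuration from /etc/rancher/k3s/config.yaml, where each key mirrors a server flag; here, datastore-endpoint mirrors --datastore-endpoint.

```shell
# Write a K3s server config fragment to a local file.
# datastore-endpoint mirrors the --datastore-endpoint server flag.
# Placeholder values: replace the user, password, host, and database name.
cat > k3s-datastore.yaml <<'EOF'
datastore-endpoint: "postgres://k3s_user:change-me@db.example.internal:5432/k3s"
EOF

# Show what was written
cat k3s-datastore.yaml
```

Copy the key into /etc/rancher/k3s/config.yaml on the server (merging it with any existing keys) before running the installation script.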

K3s vs. K8s

K3s is well known for its lightweight nature and its ability to run production-grade Kubernetes clusters on minimal hardware, such as edge devices.

Let’s discuss what features we get with the K3s Kubernetes cluster:

Lightweight

All components of K3s are packaged into a single binary of less than 100 MB, and the control plane components run within a single process. K3s installs quickly on edge devices, and multi-server K3s deployments can still provide high availability of services.

Secure

K3s includes a secrets-encrypt subcommand (k3s secrets-encrypt) that gives you control over:

- Disabling/Enabling secrets encryption

- Adding new encryption keys

- Rotating and deleting encryption keys

- Re-encrypting secrets

Moreover, K3s comes with a minimal set of external dependencies, which reduces the attack surface and makes it a more secure Kubernetes distribution.
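Secrets encryption has to be enabled when the server starts before the k3s secrets-encrypt subcommands can manage it. A minimal sketch, assuming the server is configured through /etc/rancher/k3s/config.yaml: the secrets-encryption key mirrors the --secrets-encryption server flag. The local file name is arbitrary.

```shell
# Write a K3s server config fragment that enables encryption of Secrets at rest.
# secrets-encryption mirrors the --secrets-encryption server flag.
# Assumes this key is later merged into /etc/rancher/k3s/config.yaml on the server.
cat > k3s-secrets.yaml <<'EOF'
secrets-encryption: true
EOF

cat k3s-secrets.yaml
```

Once the server is running with this setting, sudo k3s secrets-encrypt status reports the current encryption state. The enable, disable, prepare, rotate, and reencrypt subcommands correspond to the operations listed above, and recent releases also offer rotate-keys to rotate and re-encrypt in one step; run sudo k3s secrets-encrypt --help to confirm which subcommands your version supports.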

Easy Kubernetes Upgrades

Cluster upgrades are among the most complex and critical Kubernetes tasks, and K3s gives us two options for handling them:

Automated Upgrades: Manage upgrades with Rancher’s system-upgrade-controller, which upgrades nodes according to Plan resources that you apply to the cluster. The K3s documentation covers the full procedure.
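The system-upgrade-controller acts on Plan resources. The manifest below is a sketch modeled on the server plan in the K3s documentation: it upgrades control-plane nodes one at a time to the current release of the stable channel. It assumes the controller is already installed in the system-upgrade namespace with a service account named system-upgrade; agent nodes need a separate plan.

```shell
# Write a Plan for Rancher's system-upgrade-controller.
# Assumes the controller runs in the system-upgrade namespace with a
# service account named system-upgrade.
cat > k3s-server-upgrade-plan.yaml <<'EOF'
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: server-plan
  namespace: system-upgrade
spec:
  concurrency: 1          # upgrade one server node at a time
  cordon: true            # cordon each node before it is upgraded
  nodeSelector:
    matchExpressions:
      - key: node-role.kubernetes.io/control-plane
        operator: In
        values:
          - "true"
  serviceAccountName: system-upgrade
  upgrade:
    image: rancher/k3s-upgrade
  # Track the latest release in the stable channel instead of pinning a version
  channel: https://update.k3s.io/v1-release/channels/stable
EOF
```

Apply it with sudo kubectl apply -f k3s-server-upgrade-plan.yaml; the controller then runs upgrade jobs on the matching nodes.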

Manual Upgrades: Re-run the K3s installation script with the desired channel or version, or manually replace the k3s binary with the desired release. The K3s documentation covers the full procedure.
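For the manual path, re-running the installation script with INSTALL_K3S_CHANNEL or INSTALL_K3S_VERSION set upgrades the binary in place. The helper below is a hypothetical convenience wrapper (the function name is ours); the two environment variables belong to the installation script. The script should be given the same arguments and environment variables as the original install, so the helper passes any extra arguments through. Upgrade server nodes before agents.

```shell
# Hypothetical helper: upgrade K3s on this node by re-running the official
# installation script. The first argument is either a channel name
# (e.g. stable, latest) or an exact release tag starting with "v".
# Any further arguments are passed to the script unchanged.
upgrade_k3s() {
  target="${1:-}"
  if [ -z "$target" ]; then
    echo "usage: upgrade_k3s <channel|version> [original install args...]" >&2
    return 1
  fi
  shift
  case "$target" in
    # Exact release tag, pinned with INSTALL_K3S_VERSION
    v*) curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="$target" sh -s - "$@" ;;
    # Anything else is treated as a channel name
    *)  curl -sfL https://get.k3s.io | INSTALL_K3S_CHANNEL="$target" sh -s - "$@" ;;
  esac
}
```

For example, run upgrade_k3s stable on the server. On an agent, export the original K3S_URL and K3S_TOKEN first so the script reinstalls it as an agent rather than as a server.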

Production Ready

K3s can bring up a production-ready Kubernetes cluster within a few minutes, as it comes with built-in integrations like:

- Containerd (CRI)

- Flannel (CNI)

- CoreDNS

- Traefik Ingress controller

- ServiceLB (Load-Balancer)

- Kube-router

- Local-path-provisioner

- Host utilities (iptables, etc.)

And because all of these components are packaged in a single binary, a cluster with everything it needs can be provisioned quickly.
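Each bundled component can also be switched off if you plan to bring your own, for example a different ingress controller. A minimal sketch: the disable key in /etc/rancher/k3s/config.yaml mirrors repeated --disable server flags, and traefik and servicelb are two of the accepted values. The local file name is arbitrary.

```shell
# Write a K3s server config fragment that skips the bundled Traefik ingress
# controller and the ServiceLB load balancer. Each list entry mirrors one
# --disable server flag.
cat > k3s-disable.yaml <<'EOF'
disable:
  - traefik
  - servicelb
EOF

cat k3s-disable.yaml
```

Merge the keys into /etc/rancher/k3s/config.yaml before installing, or pass the equivalent flags directly: curl -sfL https://get.k3s.io | sh -s - --disable traefik --disable servicelb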

Quick Guide for K3s Installation

Let’s get hands-on with K3s. In this section, we will install K3s on an Ubuntu machine and join a second node to it.

Step 1: Setting up an environment

To install K3s, we need a VM running Linux. For this tutorial, we will launch two EC2 instances running Ubuntu 24.04: one will be the server node and the other the agent node. You can follow the AWS documentation to launch the EC2 instances. Make sure the server’s security group allows inbound TCP port 6443 from the agent (agents register with the server on this port) and, for Flannel’s default VXLAN backend, UDP port 8472 between the nodes.

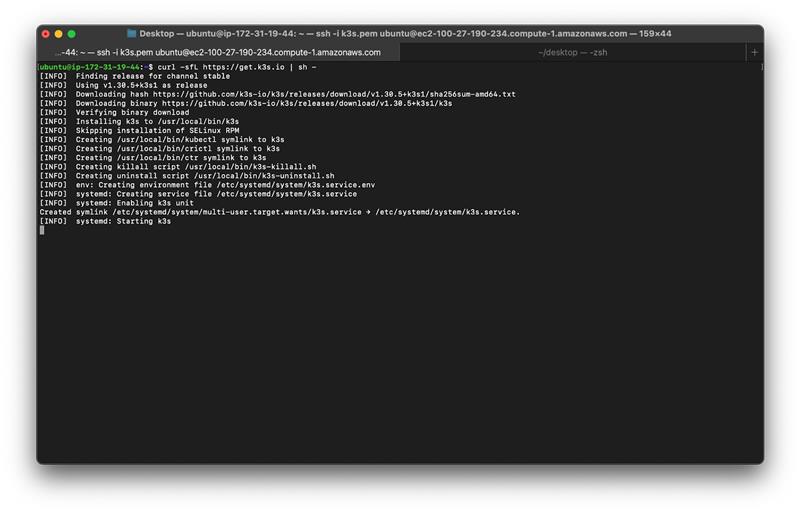

Step 2: K3s Server Node Installation

- K3s provides a convenient installation script that sets up a server node in a single step.

Run the following command to install K3s as a server: curl -sfL https://get.k3s.io | sh -

- Once the installation completes, the script has also set up kubectl, so you can query the cluster right away with sudo: sudo kubectl get pods -n kube-system

- The output lists the pods for the bundled components that make up a production-grade cluster, such as CoreDNS, Traefik, Metrics Server, and the local-path provisioner.
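By default, the kubeconfig K3s writes to /etc/rancher/k3s/k3s.yaml is readable only by root, so kubectl run as a regular user cannot use it. A common approach, sketched below, is to copy it into your own ~/.kube/config. The function name is hypothetical; the kubectl wait call simply blocks until every node reports Ready.

```shell
# Hypothetical helper: give the current (non-root) user a copy of the K3s
# admin kubeconfig, then wait until every node reports Ready.
setup_user_kubeconfig() {
  src="${1:-/etc/rancher/k3s/k3s.yaml}"   # default location of the K3s admin kubeconfig
  mkdir -p "$HOME/.kube"
  sudo cp "$src" "$HOME/.kube/config" || return 1
  sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
  chmod 600 "$HOME/.kube/config"          # keep the credentials private to this user
  export KUBECONFIG="$HOME/.kube/config"
  kubectl wait --for=condition=Ready nodes --all --timeout=120s
}
```

Call setup_user_kubeconfig once on the server; afterwards kubectl get pods -n kube-system works without sudo in that shell. Alternatively, the server flag --write-kubeconfig-mode 644 makes the file world-readable, which is simpler but less secure.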

Step 3: K3s Agent Node Installation

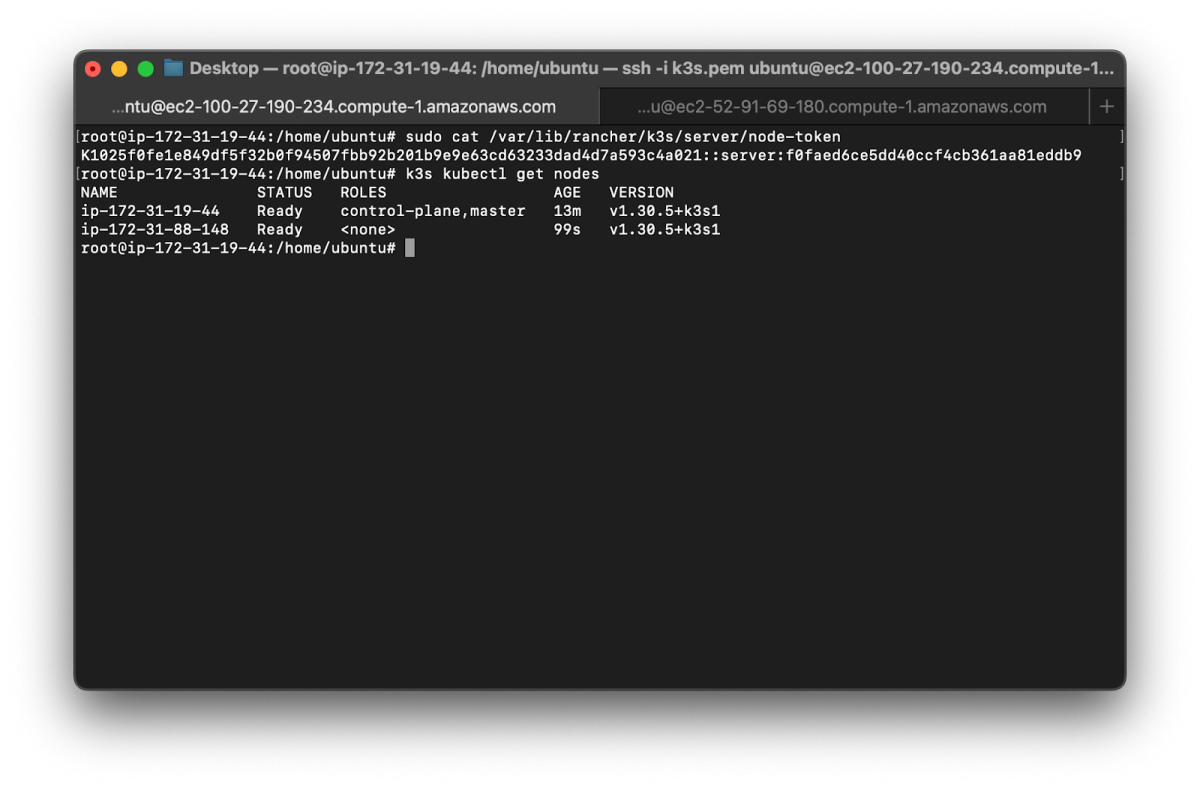

- Let’s deploy an agent node for K3s. For that, we need the server node’s IP address and its node token, so the agent can locate the server and authenticate with it.

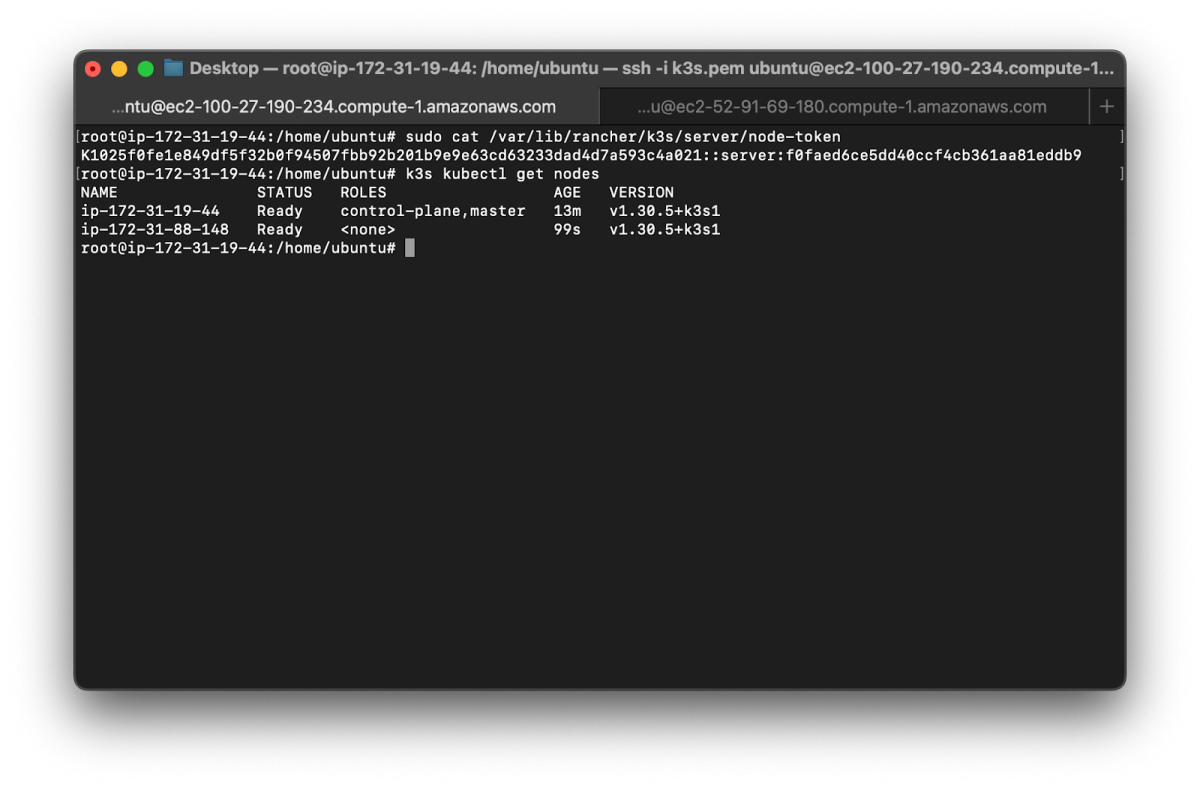

- The node token is generated when the server is installed. To retrieve it, run the following command on the server node: sudo cat /var/lib/rancher/k3s/server/node-token

- This prints the token that agent nodes use to join the cluster.

Once you have the server IP and node token, run the following command on the K3s agent node: curl -sfL https://get.k3s.io | K3S_URL=https://<SERVER_IP>:6443 K3S_TOKEN=<NODE_TOKEN> sh -

- Now, on the server node, run sudo kubectl get nodes; the agent node should appear in the list.
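The join step can be wrapped in a small helper so that a missing IP or token fails fast instead of producing a broken agent install. The function name is hypothetical; K3S_URL and K3S_TOKEN are the installation script’s own variables, and setting K3S_URL is what makes the script install an agent rather than a server.

```shell
# Hypothetical helper: install K3s on this machine as an agent and join it
# to an existing server. Run it on the agent node.
join_k3s_agent() {
  server_ip="${1:-}"    # IP address of the K3s server node
  node_token="${2:-}"   # contents of /var/lib/rancher/k3s/server/node-token on the server
  if [ -z "$server_ip" ] || [ -z "$node_token" ]; then
    echo "usage: join_k3s_agent <server-ip> <node-token>" >&2
    return 1
  fi
  # K3S_URL switches the installation script into agent mode
  curl -sfL https://get.k3s.io | K3S_URL="https://${server_ip}:6443" K3S_TOKEN="${node_token}" sh -
}
```

For example, run join_k3s_agent <SERVER_IP> <NODE_TOKEN> on the agent, using the values gathered in the previous steps.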

Step 4: Deploying Application to K3s

- Let’s deploy an application to our K3s cluster. We have created a manifest for nginx named nginx-deployment.yaml; to deploy it, run: sudo kubectl apply -f nginx-deployment.yaml

Once the application is deployed, run sudo kubectl get pods to see the pods it created.
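The contents of nginx-deployment.yaml are not shown above. A minimal manifest that would work for this step is sketched below; the replica count, the app=nginx label, and the nginx:1.27 image tag are our assumptions rather than the original file’s contents.

```shell
# Write a minimal nginx Deployment manifest to nginx-deployment.yaml.
# Assumed values: 2 replicas, label app=nginx, image nginx:1.27.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx           # must match the pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.27
          ports:
            - containerPort: 80
EOF
```

After applying it, sudo kubectl rollout status deployment/nginx-deployment waits until both replicas are available, and sudo kubectl get pods -l app=nginx -o wide shows which node each pod landed on.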

Pros and Cons of K3s

Complexities of Kubernetes

We have now discussed what K3s is, how it differs from K8s, and what it adds on top of K8s. K3s is a Kubernetes distribution that simplifies deploying clusters, but it still inherits the well-known complexities of Kubernetes. Managing complex Kubernetes clusters with traditional tools like kubectl is tedious and error-prone, largely because of limited visibility and constant context switching. Here are the complexities and challenges teams often face when operating clusters through CLI tools:

- Limited Visibility Across Clusters: Command-line tools offer little insight into the state of multiple clusters at once, which makes it hard to monitor system health and performance.

- Complex User Access Management: Managing user permissions and roles across clusters through the CLI is difficult and increases the risk of security misconfigurations.

- Configuration Management Difficulties: Maintaining consistent configurations across various environments can be error-prone and time-consuming.

- Scalability Challenges: As clusters grow, manual CLI-based management becomes increasingly cumbersome, making it difficult to efficiently scale operations and maintain system reliability.

K3s with Kubernetes Dashboard by Devtron

A Kubernetes dashboard can address many of these complexities by providing visibility across multiple clusters and improving operational efficiency.

In this blog, we will see how the Kubernetes dashboard by Devtron can help us manage multiple K3s clusters. Devtron’s Kubernetes dashboard offers granular visibility across our K3s clusters, along with complete 360-degree visibility into the applications deployed to them. It also addresses access management in Kubernetes: it offers a GUI, multiple SSO options for quick, secure access, and robust, fine-grained access control. For easy troubleshooting, Devtron streams the logs of Kubernetes objects and provides an integrated terminal for running commands inside pods.

Let’s examine some major features of Devtron’s Kubernetes dashboard. We’ll see how to manage our K3s cluster and its workloads using Devtron’s Kubernetes dashboard.

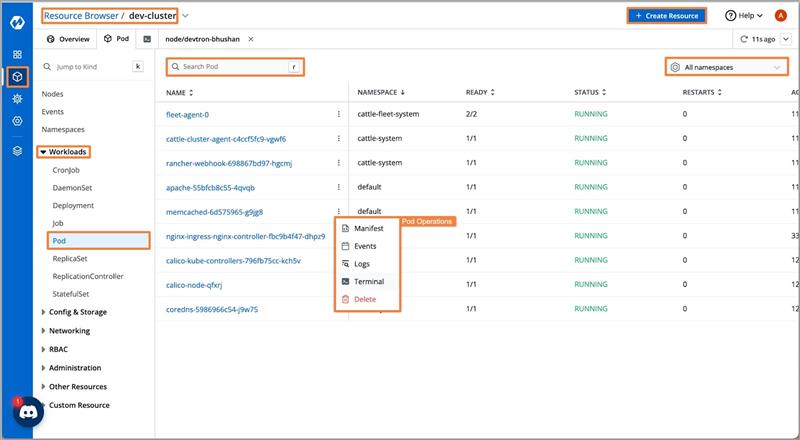

Visibility Across Clusters

Devtron’s Resource Browser provides us with granular visibility of our K3s cluster, where we can visualize each node, namespace, workload, and all other resources of our cluster.

The Kubernetes dashboard by Devtron also lets us act quickly, with dedicated terminal support for troubleshooting nodes and pods, and actions to Cordon, Drain, Edit taints, Edit node config, and Delete nodes. Similar capabilities are available for managing Kubernetes workloads such as Pods, Deployments, and Jobs.
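For comparison, the Cordon and Drain actions correspond to standard kubectl commands. The sketch below shows the CLI sequence for taking a node out of service for maintenance; the function name is hypothetical, and the drain flags shown are common choices rather than the only correct ones.

```shell
# Hypothetical helper: move workloads off a node before maintenance.
drain_node_for_maintenance() {
  node="${1:-}"
  if [ -z "$node" ]; then
    echo "usage: drain_node_for_maintenance <node-name>" >&2
    return 1
  fi
  # Mark the node unschedulable (kubectl drain would also do this itself)
  kubectl cordon "$node" || return 1
  # Evict running pods; DaemonSet pods are skipped and emptyDir data is discarded
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data --timeout=300s
}
```

When maintenance is done, kubectl uncordon <node-name> makes the node schedulable again.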

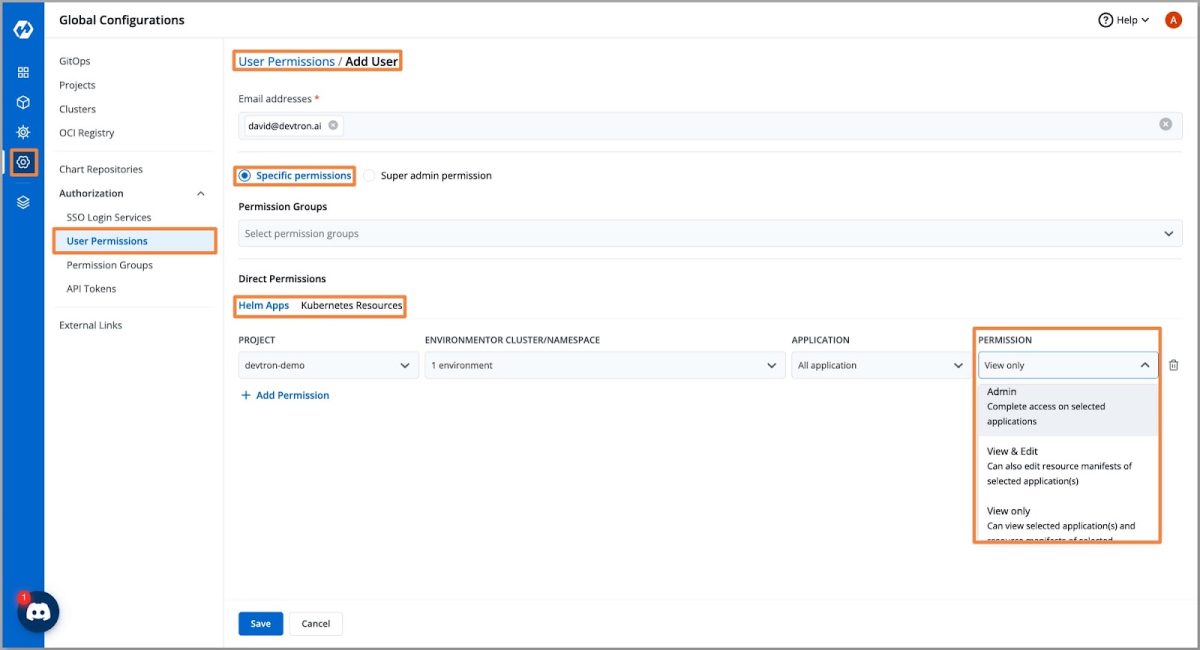

User Access Management

Devtron supports robust RBAC configuration with granular access control for Kubernetes resources. We can create Permission Groups with predefined access levels and easily assign them to new users. Devtron also supports several SSO integrations, streamlining access management and eliminating the need for separate dashboard credentials.

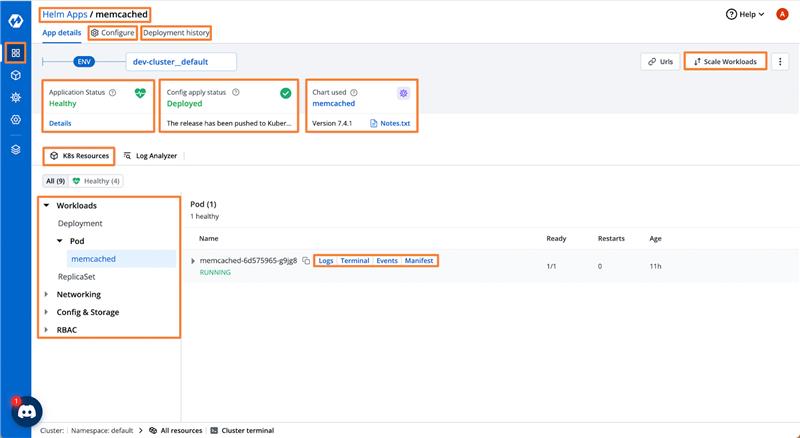

Application Management over K3s Kubernetes Cluster

The Devtron dashboard shows the live status of our applications and logically groups the Kubernetes resources of each deployed application, which makes applications easier to manage. When an application needs troubleshooting, Devtron lets us launch a terminal and inspect logs, events, and manifests.

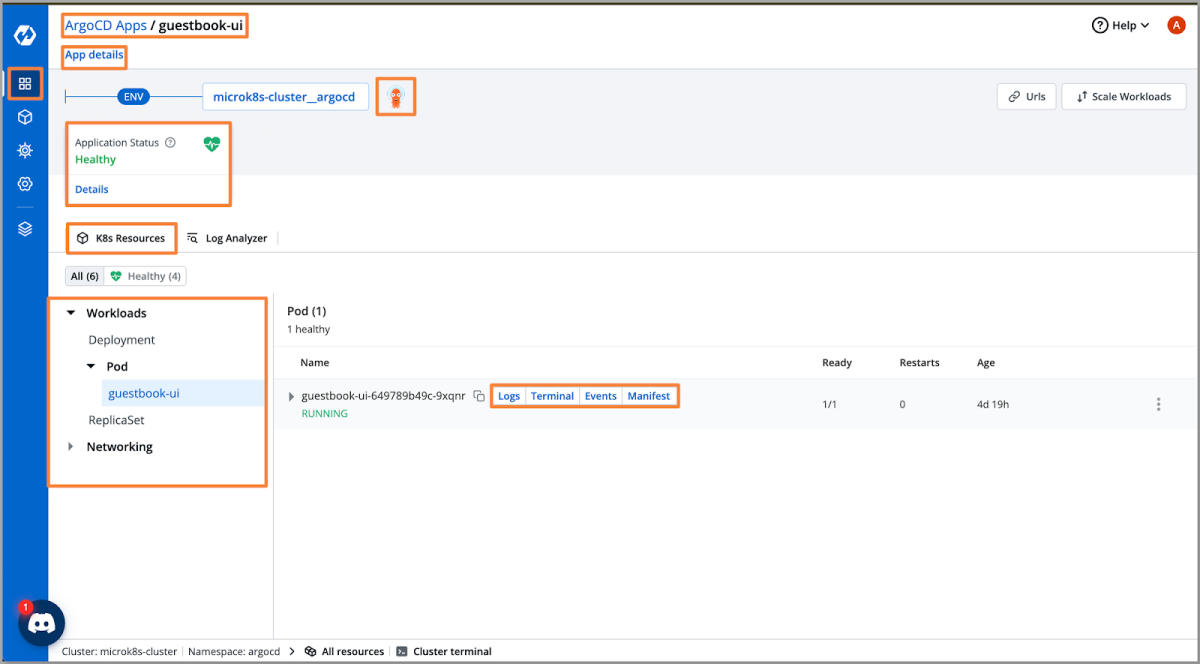

For Argo CD applications, Devtron’s Kubernetes dashboard offers a single, unified view of applications across multiple clusters, eliminating the need to switch context between Argo CD instances. The dashboard logically groups each application’s Kubernetes resources and lets users inspect its logs, events, and live manifest. This clear visualization streamlines operations and reduces errors.

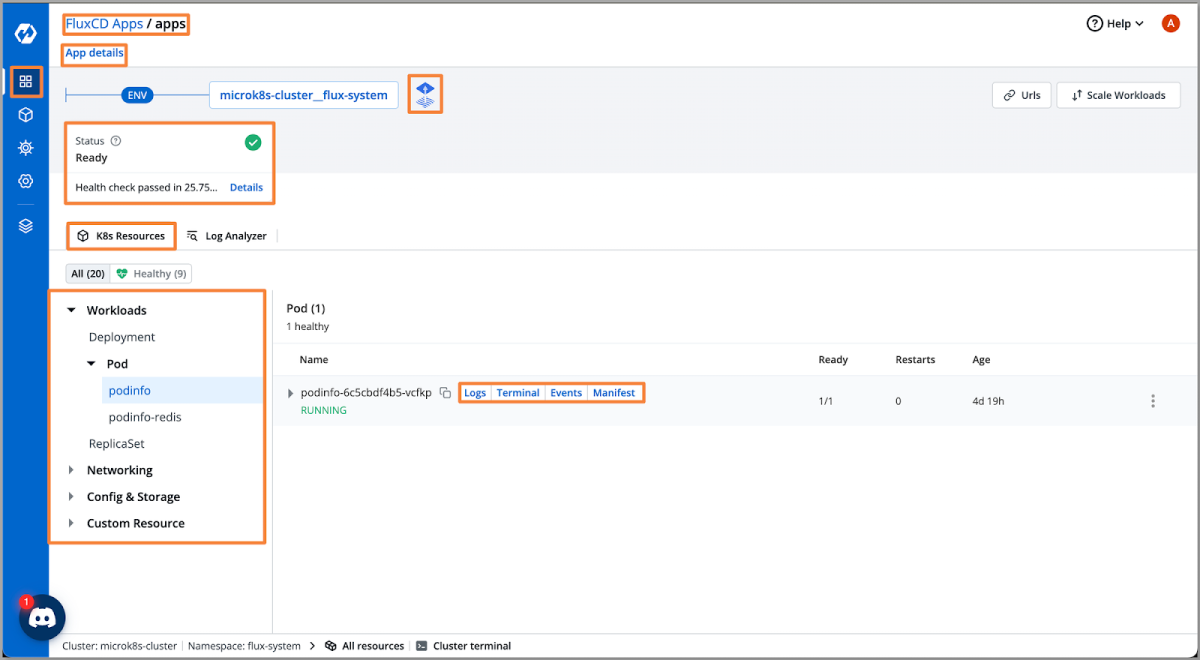

Flux CD is operated primarily through its CLI. For Flux CD applications, Devtron’s Kubernetes dashboard provides a UI that complements the Flux CLI, giving complete visibility into applications and their Kubernetes resources while bridging the gap between CLI power and visual intuitiveness.

Configuration Management for Applications

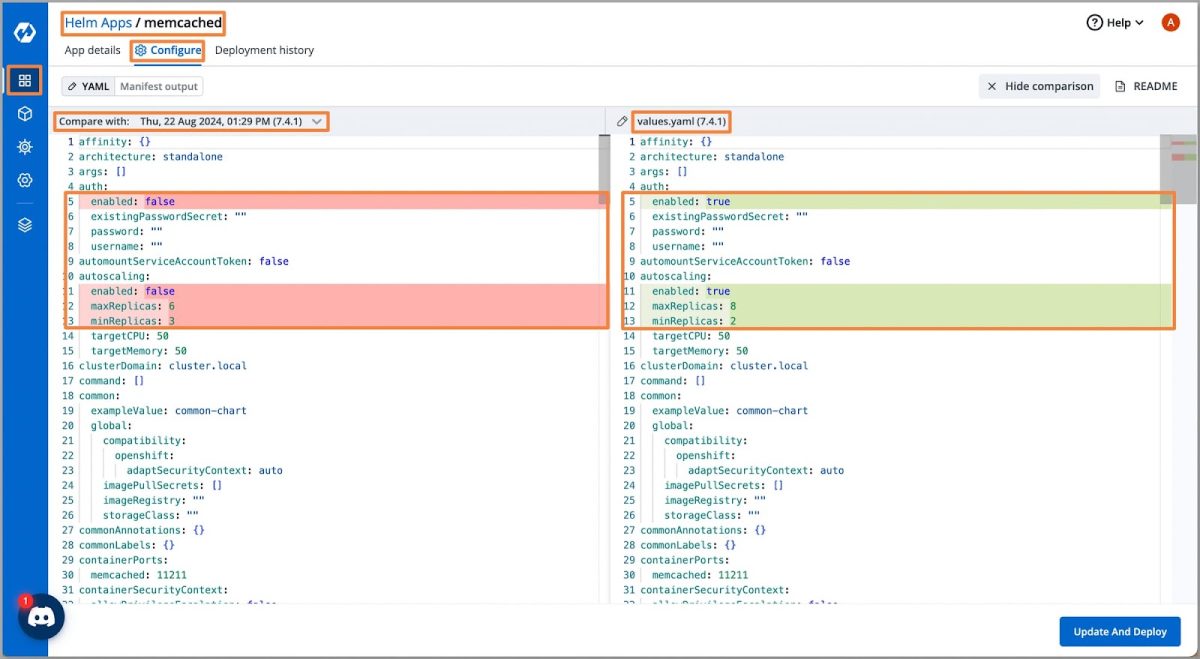

A major challenge when managing Kubernetes with CLI tools is the lack of visibility into configuration differences between versions and of an easy way to compare them. Devtron lets us compare the configuration of previous deployments with newer ones, and its Deployment history provides an audit log of deployments.
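To see what this looks like from the CLI, the sketch below compares two revisions of a Deployment using kubectl rollout history. It works, but it only covers the pod template and requires stitching the output together by hand, which is the gap a visual configuration diff fills. The function name is hypothetical.

```shell
# Hypothetical helper: diff the pod templates of two Deployment revisions.
compare_deployment_revisions() {
  deploy="${1:-}"; rev_a="${2:-}"; rev_b="${3:-}"
  if [ -z "$deploy" ] || [ -z "$rev_a" ] || [ -z "$rev_b" ]; then
    echo "usage: compare_deployment_revisions <deployment> <rev-a> <rev-b>" >&2
    return 1
  fi
  tmp="$(mktemp -d)"
  # List all recorded revisions of the Deployment
  kubectl rollout history "deployment/$deploy"
  # Dump the pod template stored for each requested revision
  kubectl rollout history "deployment/$deploy" --revision="$rev_a" > "$tmp/a.txt" || { rm -rf "$tmp"; return 1; }
  kubectl rollout history "deployment/$deploy" --revision="$rev_b" > "$tmp/b.txt" || { rm -rf "$tmp"; return 1; }
  # Unified diff; diff exits with status 1 when the revisions differ
  diff -u "$tmp/a.txt" "$tmp/b.txt"
  status=$?
  rm -rf "$tmp"
  return "$status"
}
```

For example, compare_deployment_revisions nginx-deployment 1 2 shows what changed in the pod template between the first two rollouts.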

That’s it! We now have a secure, production-grade Kubernetes cluster built with K3s and a robust Kubernetes dashboard by Devtron to manage the cluster and its workloads. From here, we can quickly deploy a fleet of K3s clusters on-premises and manage them with Devtron’s Kubernetes dashboard: K3s provides easy installation, version upgrades, and a secure Kubernetes environment, while Devtron handles Kubernetes complexity with visibility across multiple clusters, fine-grained access control, application management, configuration diffs, and, last but not least, troubleshooting capabilities.

Conclusion

K3s emerges as a powerful lightweight alternative to vanilla Kubernetes, offering a production-grade solution that’s well-suited for edge computing, IoT devices, and resource-constrained environments. While it maintains core Kubernetes functionality in a single binary of less than 100 MB, K3s simplifies cluster management through features like automated upgrades, built-in security tools, and reduced dependencies. When combined with management tools like Devtron’s Kubernetes dashboard, teams can effectively overcome the inherent complexities of Kubernetes management while keeping the benefits of a lightweight, secure, and efficient container orchestration platform.